Background:

Artificial intelligence (AI) has begun reshaping software development and testing practices, particularly where scalability, precision, and efficiency are paramount.

Government agencies struggle with massive amounts of unstructured data that must be verified, processed, and analyzed, which is often difficult and expensive. The team at REI Systems has applied a variety of customized AI tools and platforms in mission-focused ways, developing solutions for customers such as the Department of Defense (DoD) to problems that could not be addressed with commercial off-the-shelf (COTS) products and would not have been cost-effective to solve from scratch.

Recently, REI leveraged advancements in AI to develop an innovative approach to test automation for a defense customer that needed to evaluate more than 60,000 test cases for hundreds of applications. These test cases were documented in various formats (Microsoft Word, Excel, and XML, among others) with varying levels of detail and consistency. The team brought together Generative AI (GenAI), AI coding assistants, and AI-powered test automation to deliver a holistic, fully functional solution that resulted in faster, more automated delivery at significantly reduced costs.

Challenge:

The traditional software development lifecycle is often labor-intensive, time-consuming, and expensive. Testing is a critical phase in that lifecycle, ensuring that applications function correctly and that high-quality products are released to end users.

Defense agency leaders recognized that manual testing was unrealistic due to the sheer number of test cases. For some applications, it could take weeks for a team of testers to complete a full regression test. With automation, the entire regression suite can be completed in a matter of days without human intervention. However, automating these test cases, which means parsing through and analyzing hundreds of thousands of documented test scenarios and developing their automation, is a monumental task.

Selenium is one of the most popular web automation frameworks and, as an open-source tool, has been the de facto standard for test automation. However, standardizing on Selenium had a few key drawbacks, particularly for this use case:

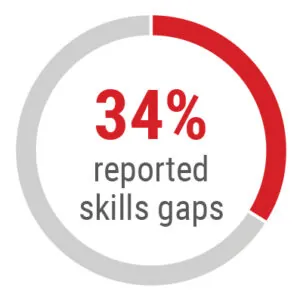

- The coding effort required to develop automated test scripts in Selenium is costly and requires specialized skills. In a 2023 Gartner Peer Community survey of decision-makers in automated software testing, 34% reported gaps in automation skills.

- Modern test automation tools have added a range of AI- and ML-driven capabilities aimed at optimization and efficiency that Selenium does not offer.

Approach:

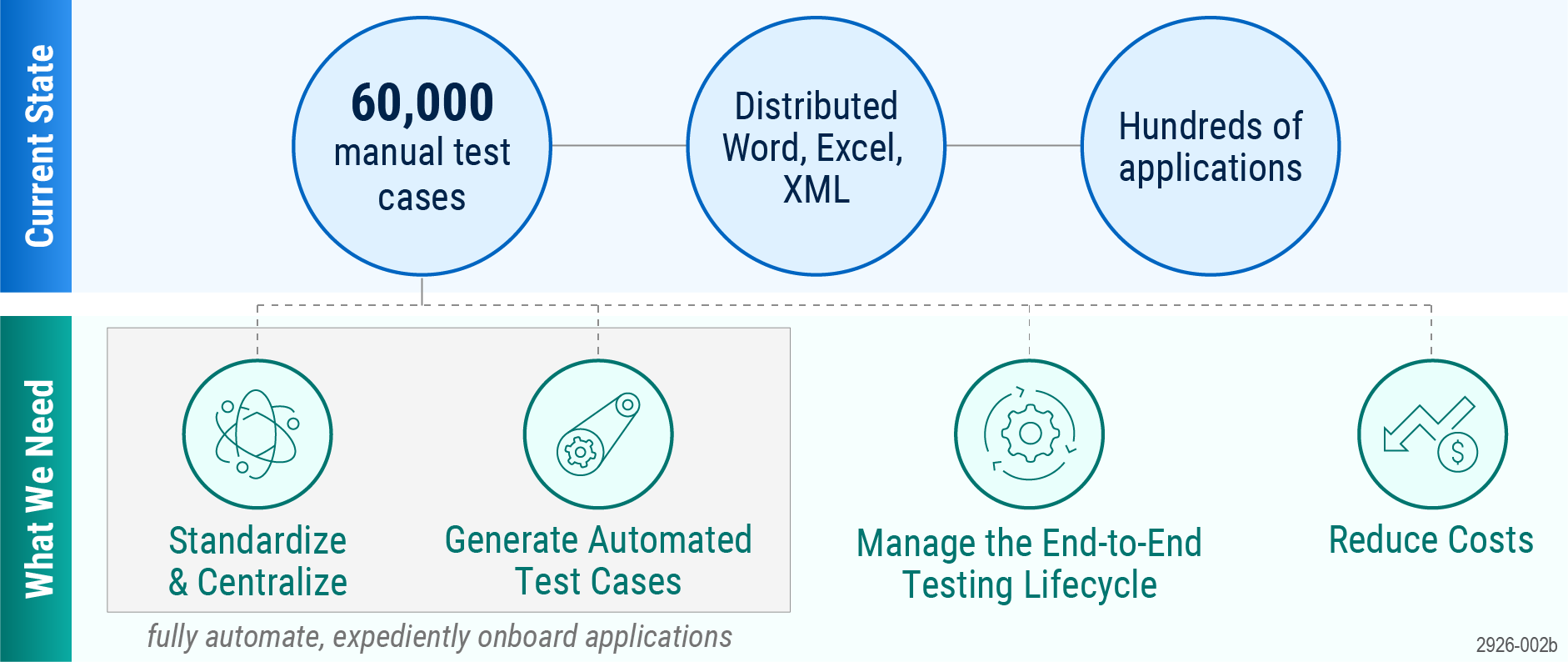

Our team identified four core objectives for addressing this particular use case, also highlighted in Fig. 1:

- Standardize and Centralize Test Cases: Rather than having individual application teams manage and execute test cases inconsistently, standardizing and consolidating them in a centralized tool would make them easier to maintain, track, and execute, reducing costs and improving efficiency in the long run.

- Generate Automated Test Cases: Automating this many test cases by hand would require a large team of test automation engineers and developers, so we needed the ability to generate the automated tests programmatically.

- Manage the End-to-End Testing Lifecycle: These automated tests required full visibility, management, and reporting.

- Reduce Costs: All the above should be achieved while reducing labor costs, minimizing the number and cost of required software licenses, and keeping ongoing maintenance costs to a minimum.

Fig. 1: The challenge of developing a cost-effective solution for a large-scale problem

Enter Artificial Intelligence

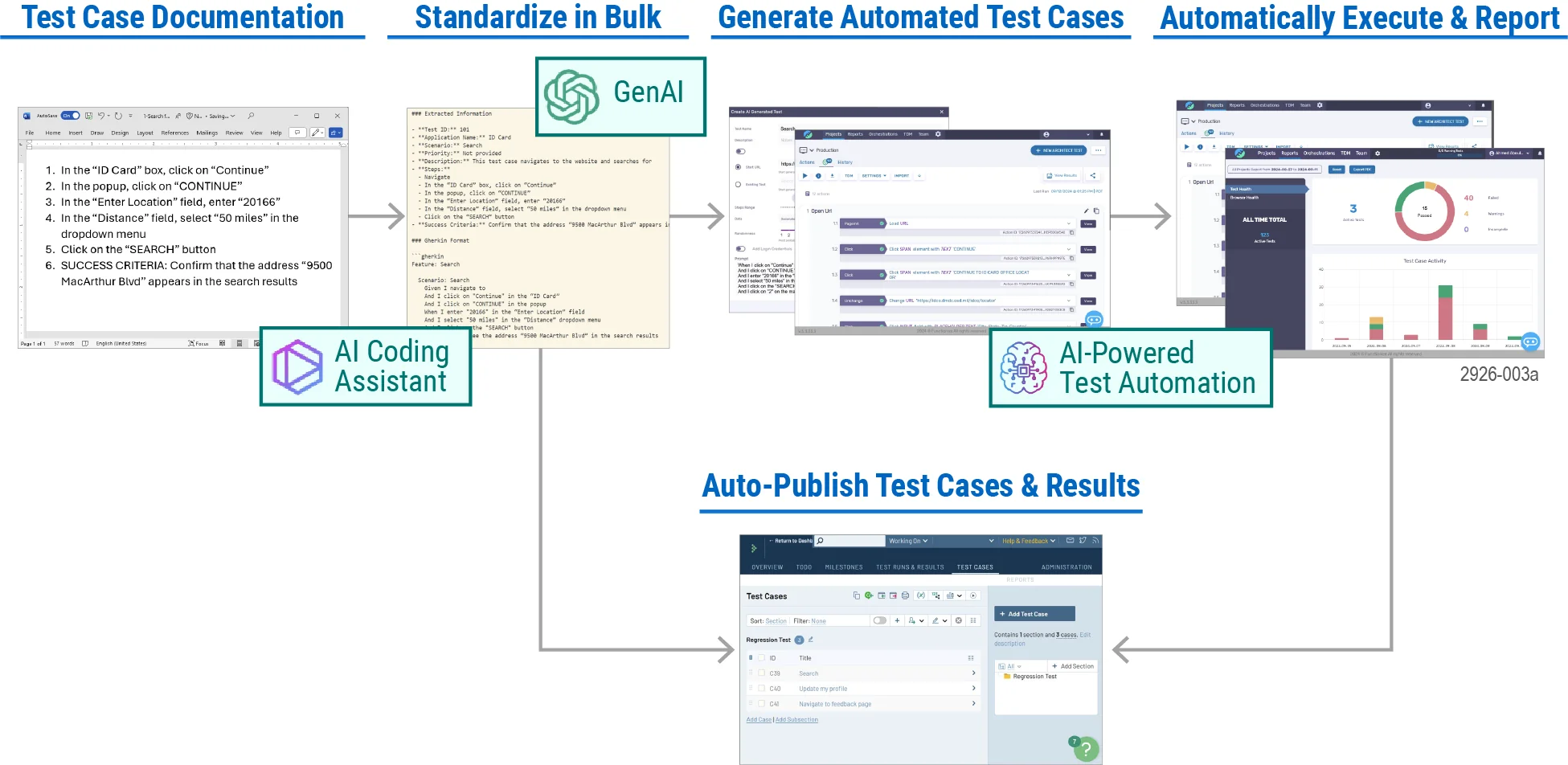

Our proposed approach leveraged recent advancements in AI in support of our goals of automating development and accelerating delivery. For our pilot, we integrated the following AI capabilities to deliver a holistic solution:

- Generative AI: Used to read, parse, and analyze source documents, standardizing them into a predefined output.

- AI Coding Assistant: Used to accelerate development of the GenAI processing code.

- AI-Powered Test Automation Platform: To create and execute automated test cases without human intervention.

Fig. 2: How multiple AI capabilities came together to deliver a holistic solution

The figure above illustrates the approach’s workflow. The non-standard, semi-structured, manually documented test cases were fed into a GenAI model, which performed the analysis and standardization a human would have otherwise performed. The code needed for this was created with the aid of an AI coding assistant to accelerate the development. The standardized output was then pushed into an AI-powered test automation platform, which created the automated tests within the tool without the need for human intervention.
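
The workflow above amounts to a bulk loop over the source documents. The sketch below is illustrative only: `run_pipeline`, `standardize`, and `create_test` are hypothetical names, and both stage functions are placeholders for the real components (in the pilot, gpt-4o performed the standardization and the AI-powered test automation platform created the tests). Routing failed inputs to a review list is an assumption added so that one bad document does not halt the batch; the workflow description does not specify this.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Optional, Tuple


@dataclass
class PipelineReport:
    created: List[str] = field(default_factory=list)       # tests created on the platform
    needs_review: List[str] = field(default_factory=list)  # inputs that could not be processed


def run_pipeline(
    documents: Iterable[Tuple[str, str]],
    standardize: Callable[[str], Optional[str]],
    create_test: Callable[[str, str], bool],
) -> PipelineReport:
    """Push every (doc_id, raw_text) pair through both stages without stopping on failures."""
    report = PipelineReport()
    for doc_id, raw_text in documents:
        # Stage 1 (hypothetical): the GenAI model returns standardized text, or None.
        standardized = standardize(raw_text)
        if standardized is None:
            report.needs_review.append(doc_id)
            continue
        # Stage 2 (hypothetical): the test automation platform imports the standardized case.
        if create_test(doc_id, standardized):
            report.created.append(doc_id)
        else:
            report.needs_review.append(doc_id)
    return report


if __name__ == "__main__":
    docs = [("TC-001", "Open login page, enter valid user, expect dashboard"), ("TC-002", "")]
    report = run_pipeline(
        docs,
        standardize=lambda text: text.strip() or None,  # stand-in for the model call
        create_test=lambda doc_id, text: True,          # stand-in for the platform import
    )
    print(report.created, report.needs_review)  # ['TC-001'] ['TC-002']
```

Passing the stages in as callables keeps the orchestration independent of any particular model or vendor API, so each stage can be swapped or tested in isolation.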

Generative AI: Generating Output at Scale

We used GenAI to read, parse, and analyze the source documents after training the gpt-4o model. The model’s training included a specification of the expected source metadata and data, potential variations, and warnings against the unpredictability of this data. It was also pointed to the online Gherkin Reference documentation. The extracted information was standardized into a predefined output.

This approach allowed us to process the source information in bulk and did not require manual intervention by a human analyst.
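
The pilot's actual prompt is not published, so the sketch below only illustrates the idea under stated assumptions. It wraps each source test case in hypothetical instructions that describe messy inputs, warn against inventing steps, and ask for Gherkin output. It then runs a simple structural check on the model's reply before anything is imported. `SYSTEM_PROMPT`, `build_messages`, and `validate_gherkin` are illustrative names, and requiring a `Then` step is an assumed acceptance rule, not part of the Gherkin specification.

```python
from typing import Dict, List

# Hypothetical instructions; the pilot's actual prompt is not published.
SYSTEM_PROMPT = (
    "You convert manually documented test cases into Gherkin. "
    "Inputs come from Word, Excel, or XML exports and may have missing, "
    "renamed, or inconsistent fields; never invent steps that are not in the source. "
    "Follow the Gherkin Reference syntax and return exactly one Feature "
    "containing one or more Scenarios."
)

SCENARIO_KEYWORDS = ("Scenario:", "Scenario Outline:", "Scenario Template:", "Example:")
STEP_KEYWORDS = ("Given", "When", "Then", "And", "But", "*")


def build_messages(raw_test_case: str) -> List[Dict[str, str]]:
    """Wrap one source test case in a chat-style system/user message pair."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": raw_test_case},
    ]


def validate_gherkin(text: str) -> List[str]:
    """Return a list of structural problems; an empty list means the output looks usable."""
    # Ignore blank lines, comments, and tag lines such as "@smoke".
    lines = [
        ln.strip() for ln in text.splitlines()
        if ln.strip() and not ln.strip().startswith(("#", "@"))
    ]
    problems = []
    if not lines or not lines[0].startswith("Feature:"):
        problems.append("output must start with 'Feature:'")
    if not any(ln.startswith(SCENARIO_KEYWORDS) for ln in lines):
        problems.append("no Scenario found")
    steps = [ln for ln in lines if ln.split(" ", 1)[0] in STEP_KEYWORDS]
    if not any(ln.startswith("Then") for ln in steps):
        problems.append("no 'Then' step (expected result) found")
    return problems


if __name__ == "__main__":
    sample = """Feature: Login
  Scenario: Valid user signs in
    Given the login page is open
    When the user submits valid credentials
    Then the dashboard is displayed
"""
    print(validate_gherkin(sample))  # []
```

`build_messages` returns the list-of-dicts shape that chat-completion APIs accept. With the `openai` Python package (v1 or later), for example, it could be passed as `client.chat.completions.create(model="gpt-4o", messages=build_messages(text))`. Replies that fail validation could then be retried or set aside rather than pushed to the test platform.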

AI Coding Assistants: Accelerating Development

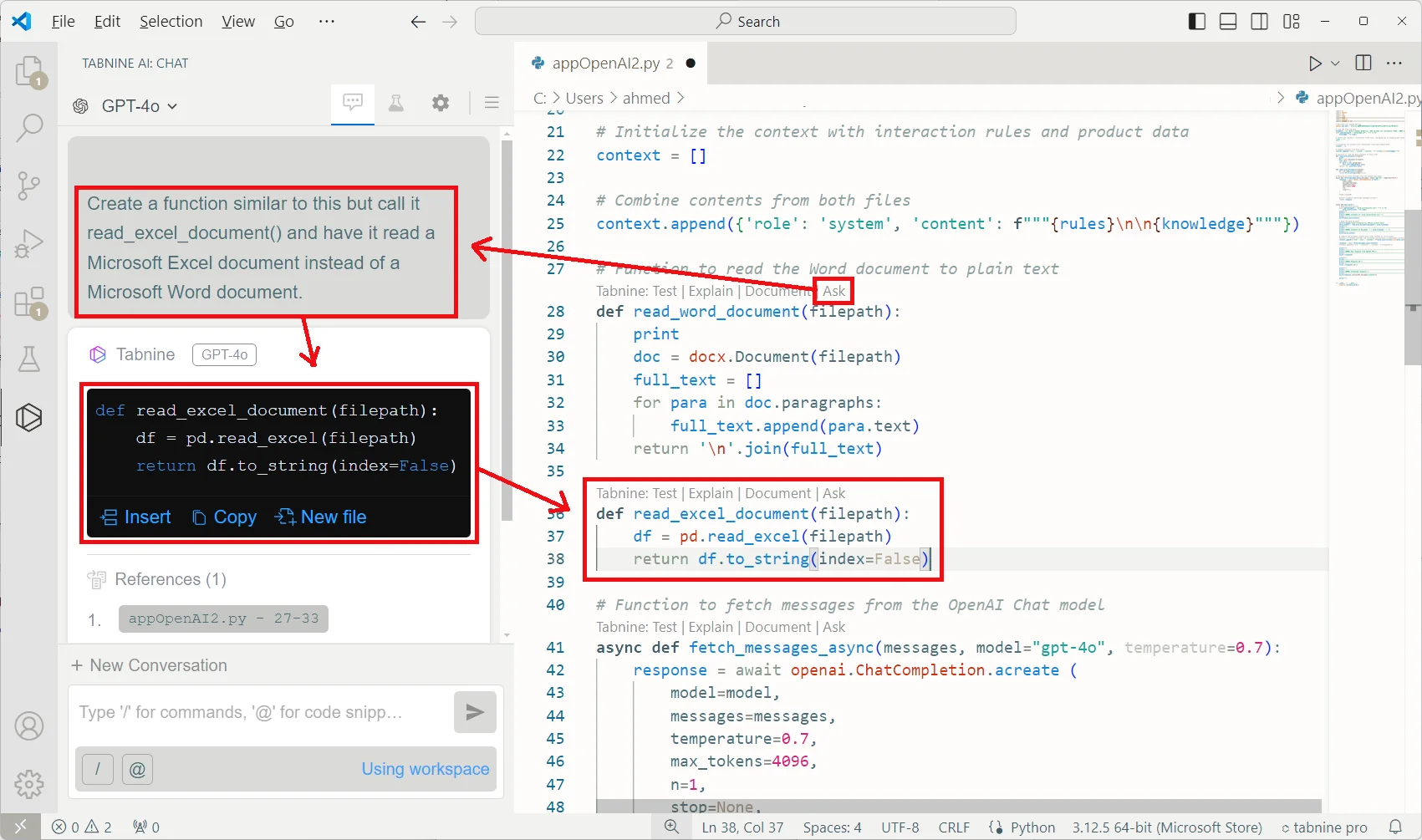

Numerous AI coding assistants have appeared on the market over the last few years. During development, we used the on-premises version of Tabnine, one of the most popular, as a plugin in Visual Studio Code, the integrated development environment (IDE) our developers use.

Fig. 3: Example of how the “Ask” function of the AI coding assistant generated code for a new function

Tabnine did not create most of the code from scratch, but it accelerated our development, helped troubleshoot issues faster, and enabled us to release our application quicker.

AI-Powered Test Automation: Automating Test Creation

AI-Powered Test Automation: Automating Test Creation

We are seeing an unprecedented level of investment in AI across the information technology industry, and test automation platform vendors have taken note. AccelQ, Functionize, Appvance, Katalon, LambdaTest, Testim, Applitools, TestSigma, and others are rapidly incorporating AI and ML into their product stacks.

Some of the AI/ML capabilities developed within these testing tools include:

- Autonomous Script Generation: Interpreting natural-language test descriptions and their intent to create automated tests without human intervention.

- Self-Healing: Using AI and ML to autonomously repair test cases that break due to interface or environment changes, without requiring manual fixes. The more the system interacts with test case scenarios, the more the model learns, improving its self-healing capabilities.

- Test Data Generation: Generating and mocking test data mimicking a real-world dataset is time-consuming. The poorer the quality of the test data, the lower the quality of the testing. GenAI is now being used to create this data.

- Anomaly Detection / Predictive Analysis: Using ML to anticipate script changes and proactively detect anomalies or patterns that may indicate issues.
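
To make the self-healing idea concrete, here is a deliberately simple sketch. It is not how any of the vendors above implement the feature, since they use trained ML models. Instead, it substitutes a plain attribute-similarity heuristic. The sketch stores a fingerprint of each element's attributes from the last passing run. If the recorded `id` no longer matches anything on the page, it picks the most similar element, provided that element clears an assumed threshold, and updates the fingerprint. The names `ElementFingerprint` and `find_with_healing`, the page model (a list of attribute dicts), and the 0.5 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ElementFingerprint:
    """Attributes recorded for a UI element the last time its test passed."""
    attrs: Dict[str, str]


def similarity(a: Dict[str, str], b: Dict[str, str]) -> float:
    """Jaccard similarity over (attribute, value) pairs: 1.0 means identical."""
    pairs_a, pairs_b = set(a.items()), set(b.items())
    union = pairs_a | pairs_b
    return len(pairs_a & pairs_b) / len(union) if union else 0.0


def find_with_healing(
    fingerprint: ElementFingerprint,
    page: List[Dict[str, str]],
    threshold: float = 0.5,
) -> Optional[Dict[str, str]]:
    """Locate an element by its recorded id, falling back to the closest match."""
    recorded_id = fingerprint.attrs.get("id")
    if recorded_id is not None:
        for element in page:
            if element.get("id") == recorded_id:
                return element  # primary locator still works; nothing to heal
    best = max(page, key=lambda el: similarity(fingerprint.attrs, el), default=None)
    if best is not None and similarity(fingerprint.attrs, best) >= threshold:
        fingerprint.attrs = dict(best)  # remember the new attributes for later runs
        return best
    return None  # nothing similar enough: report a genuine failure


if __name__ == "__main__":
    fp = ElementFingerprint({"id": "submit-btn", "tag": "button", "text": "Submit", "class": "primary"})
    page = [
        {"id": "cancel", "tag": "button", "text": "Cancel", "class": "secondary"},
        {"id": "submit", "tag": "button", "text": "Submit", "class": "primary"},  # id was renamed
    ]
    print(find_with_healing(fp, page)["id"])  # submit
```

The threshold is the key design trade-off. Set it too low, and a broken test silently "heals" onto the wrong element. Set it too high, and trivial UI changes still require manual fixes.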

Functionize and TestRail also integrate natively, which would let us track the results of tests automated in Functionize alongside the rest of our testing efforts; however, this integration had not yet been set up.
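
The team does not describe this approach, and it is not the native Functionize integration. It is offered only as an illustration of how automated results could reach TestRail before that integration is configured. The sketch builds, but does not send, a request to the `add_results_for_cases` endpoint of TestRail's API v2. The base URL, run ID, case IDs, credentials, and the mapping from platform outcomes to TestRail's default status IDs are placeholders. `build_results_request` is a hypothetical helper.

```python
import base64
import json
import urllib.request
from typing import Iterable, Tuple

# TestRail's default status IDs; instances can define additional custom statuses.
STATUS_IDS = {"passed": 1, "blocked": 2, "retest": 4, "failed": 5}


def build_results_request(
    base_url: str,
    run_id: int,
    user: str,
    api_key: str,
    results: Iterable[Tuple[int, str, str]],
) -> urllib.request.Request:
    """Build (but do not send) a POST to TestRail's add_results_for_cases endpoint.

    `results` holds (case_id, outcome, comment) tuples, e.g. exported from the
    automation platform; `outcome` must be a key of STATUS_IDS.
    """
    payload = {
        "results": [
            {"case_id": case_id, "status_id": STATUS_IDS[outcome], "comment": comment}
            for case_id, outcome, comment in results
        ]
    }
    # TestRail accepts HTTP basic auth with a username and API key.
    token = base64.b64encode(f"{user}:{api_key}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/index.php?/api/v2/add_results_for_cases/{run_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_results_request(
        "https://example.testrail.io", 42, "bot@example.com", "API_KEY",
        [(101, "passed", "Automated run"), (102, "failed", "Dashboard not shown")],
    )
    # urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
    print(req.get_method(), req.full_url)
```

Sending results in one batch per test run, rather than one call per case, keeps the number of API requests low when thousands of automated cases finish at once.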

Impact:

The three AI capabilities combined to deliver not only a fully automated solution but also faster development of the solution itself. Any alternative would have been impractical at the scale this customer required. The most notable impacts are:

- Increased Developer Productivity: Our development team reported an estimated productivity increase of up to 70% using the AI coding assistant, spending less time researching functions, parameters, and syntax, and less time figuring out API calls to external services.

- Reduced Human Element: A team of analysts, testers, and developers is no longer needed to parse through, sanitize, and standardize hundreds of thousands of documents.

- Automated Development: Leveraging the newly released TestGPT feature, tests were automated in bulk with minimal to no human involvement, taking automated development to a new level.

Conclusion:

Being an early adopter of AI comes with challenges. Ongoing code optimization is still needed to run efficiently and handle edge cases. TestGPT still requires significant training before it produces the desired results, though it continues to improve. Tabnine, for its part, sometimes failed to resolve complex bugs accurately.

Despite these challenges, the gains in programming efficiency and the more streamlined delivery of the solution are clear. As AI technologies evolve, they will play an increasingly vital role in transforming how software solutions are delivered, particularly in sectors requiring high precision, speed, and scalability.

Copyright © 2024 REI Systems. All rights reserved.