Introduction

As government agencies increasingly embark on artificial intelligence (AI) initiatives, high-quality data and effective data management have become more critical than ever. AI systems rely heavily on accurate, well-organized data to produce reliable insights. Agencies can significantly enhance their data management capabilities by adopting comprehensive storage solutions, robust integration techniques, advanced processing frameworks, and optimized architectural patterns. These technologies not only improve data accessibility, reliability, and efficiency but also provide the foundation necessary for successful AI implementation. Effective data management ensures informed decision-making, streamlined processes, and the ability to leverage AI across various governmental functions. This white paper builds upon previous discussions on Data Strategy and Data Governance. It explores how agencies can enhance their data management practices to support both current operational needs and future AI-driven innovations, ultimately leading to more responsive, efficient, and intelligent government operations.

The Data Management Process

The Data Management Process (DMP) is a broad framework encompassing the entire data lifecycle, from initial capture to final utilization. This process is foundational to various contexts, including Information Systems in general, Data Modernization efforts, and the ongoing Sustainment of data assets. The graphic (Figure 1) illustrates the essential steps involved in data management. The process commences with Data Capture, where information is gathered from various sources.

This data is then subjected to Data Validation to ensure its accuracy and consistency. Next, the validated data undergoes Data Migration, a process of transferring it to the desired systems or locations. Data Enrichment follows, where additional relevant information is integrated to enhance the value and usability of the dataset. Subsequently, Data Cleansing removes any duplicates, errors, or inconsistencies. Finally, Data Verification confirms the quality and integrity of the data, ensuring it is ready for analysis and utilization. The overall process forms a comprehensive framework for managing data effectively, transforming raw information into a valuable asset for informed decision-making.
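The stage order described above can be sketched as a simple chain of functions. This is a minimal illustration only, not the implementation behind Figure 1: the record fields (`id`, `agency`), the `AGENCY_NAMES` lookup, and every function name are hypothetical and chosen purely to show how each stage hands its output to the next.

```python
from typing import Callable

Record = dict[str, str]
Stage = Callable[[list[Record]], list[Record]]

# Hypothetical reference data used by the enrichment stage.
AGENCY_NAMES = {
    "GSA": "General Services Administration",
    "FEMA": "Federal Emergency Management Agency",
}

def capture() -> list[Record]:
    # Data Capture: stand-in for records gathered from source systems.
    return [
        {"id": "1", "agency": " gsa"},
        {"id": "2", "agency": "FEMA"},
        {"id": "2", "agency": "FEMA"},  # exact duplicate
        {"id": "", "agency": "FEMA"},   # missing identifier
    ]

def validate(records: list[Record]) -> list[Record]:
    # Data Validation: keep only records that carry the required fields.
    return [r for r in records if r.get("id") and r.get("agency")]

def migrate(records: list[Record]) -> list[Record]:
    # Data Migration: copy records into the target structure (here, a new list).
    return [dict(r) for r in records]

def enrich(records: list[Record]) -> list[Record]:
    # Data Enrichment: add the full agency name from the reference lookup.
    return [
        {**r, "agency_name": AGENCY_NAMES.get(r["agency"].strip().upper(), "unknown")}
        for r in records
    ]

def cleanse(records: list[Record]) -> list[Record]:
    # Data Cleansing: normalize the agency code and drop duplicates.
    seen: set[tuple[str, str]] = set()
    cleaned: list[Record] = []
    for r in records:
        fixed = {**r, "agency": r["agency"].strip().upper()}
        key = (fixed["id"], fixed["agency"])
        if key not in seen:
            seen.add(key)
            cleaned.append(fixed)
    return cleaned

def verify(records: list[Record]) -> list[Record]:
    # Data Verification: confirm identifiers are unique before release.
    ids = [r["id"] for r in records]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate ids remain after cleansing")
    return records

# Stages run in the order the process describes.
PIPELINE: list[Stage] = [validate, migrate, enrich, cleanse, verify]

def run_pipeline() -> list[Record]:
    records = capture()
    for stage in PIPELINE:
        records = stage(records)
    return records
```

Keeping each stage as a separate function with the same signature means a stage can be tested, replaced, or reordered without touching the others.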

Data Management Challenges and Opportunities in the Public Sector

Effective data management in the public sector is not without hurdles. As summarized in Table 1, government agencies face several challenges, including data silos, data quality issues, data security concerns, and legacy systems. Opportunities nonetheless exist to address these challenges and improve data management practices.

REI Systems: Empowering Government with Data

REI Systems recognizes these challenges and has transformed data management and analytics for multiple agencies by prioritizing security, efficiency, and data quality. This has led to standardized processes, cost savings, and improved decision-making for agencies like the General Services Administration (GSA), the Federal Emergency Management Agency (FEMA), the Health Resources and Services Administration (HRSA), and NASA.

- GSA – Working with REI, GSA streamlined the Integrated Award Environment (IAE), a suite of systems supporting the federal awards process. Previously, information about awards was hosted in separate databases before, during, and after the process. The high volume and rapid influx of data made it challenging to process efficiently. REI helped GSA consolidate multiple systems and databases to support the entire award management lifecycle, from sourcing opportunities to awarding and monitoring. REI implemented a petabyte-scale data warehouse to integrate data from various sources into a single system. This solution enabled self-service reporting, enhanced data reliability, and provided tools for better decision making. As a result, business users, even those without technical expertise, could access and create reports independently, improving data reliability and accessibility.

- FEMA – REI collaborated with FEMA to develop the FEMA Data Exchange (FEMADex) pilot, a multi-cloud architecture replacing the legacy hardware-based system. This transition from on-premises data warehouses to cloud-based solutions addressed the previous lack of flexibility and scalability in managing diverse data volumes. The new platform provides unlimited compute resources, enabling multiple teams to work concurrently in shared workspaces, thus enhancing collaboration and efficiency. The FEMADex pilot emerged as a high-availability platform supporting various data types, near real-time updates, and geospatial information for disaster response and recovery. Serving over 20,000 emergency managers, it ingests data from 107 sources, including $2.2B in grant disbursements and 129 disaster declarations. FedRAMP-compliant with 99.5% uptime, FEMADex efficiently handles concurrent user activity during disasters, marking a significant improvement in FEMA’s data management capabilities.

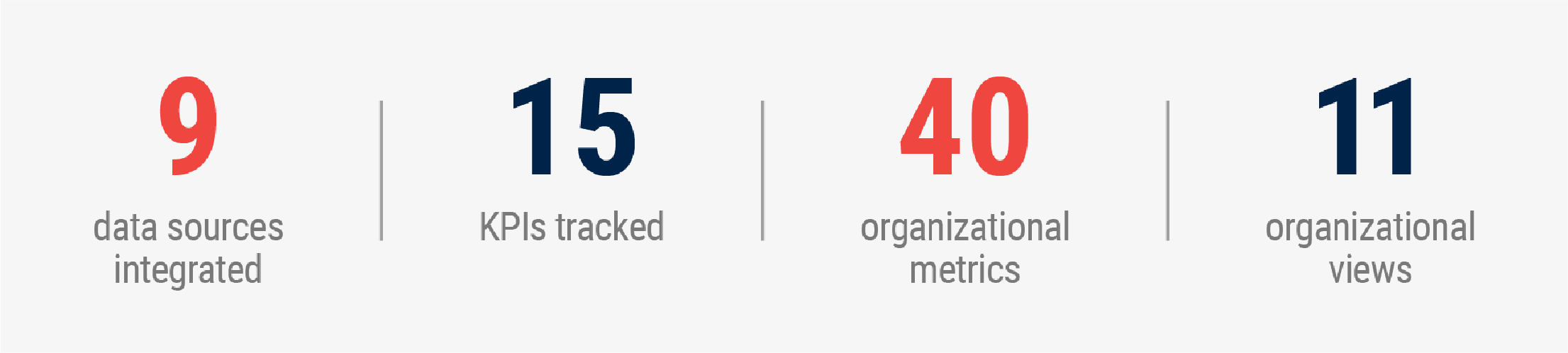

- HRSA – HRSA faced challenges similar to GSA IAE, but on a larger scale, with multiple bureaus requiring data visualization and decision-making tools. REI addressed this by integrating data from various sources into a central data warehouse, creating bureau-specific data marts for tailored visualizations. A comprehensive metadata framework managed information discovery and dissemination, ensuring proper governance for cross-bureau data sharing and viewing. REI designed Balanced Scorecards for HRSA’s Bureau of Primary Health Care, providing instant health assessments through key performance metrics. These scorecards integrate nine data sources, track 15 Key Performance Indicators (KPIs) with drilldowns to 40 organizational metrics, and offer 11 organizational views. The analytics and visualization approach prioritized audience needs, usability, data density, and consistency. This integrated platform maps KPIs to organizational goals, enabling HRSA’s leadership to monitor, analyze, drill down, access workflows, and implement data-guided actions. As a result, the agency can now make strategic decisions based on reliable, actionable data across all bureaus.

- NASA – REI significantly enhanced NASA’s data management capabilities through several key initiatives. We created a comprehensive data warehouse that integrated information from multiple systems, including the SBIR/STTR program. This centralized repository enabled robust analytics and visualization using tools like Tableau, providing valuable insights to leadership. To ensure data integrity and security, we implemented strong data governance practices. A major achievement was the successful migration from Oracle to PostgreSQL, which we accomplished using an innovative, automated solution. Leveraging open-source software (Pentaho/Kettle) and modular R scripts, we migrated 2,600 tables, reducing the effort by 95% and completing the task in just four hours. Our services also included developing APIs, ETL solutions, and interfaces with external systems such as NASA’s Account Management System and Technology Transfer System, further enhancing the agency’s data management ecosystem.

REI Systems’ Comprehensive Data Management Framework (DMF)

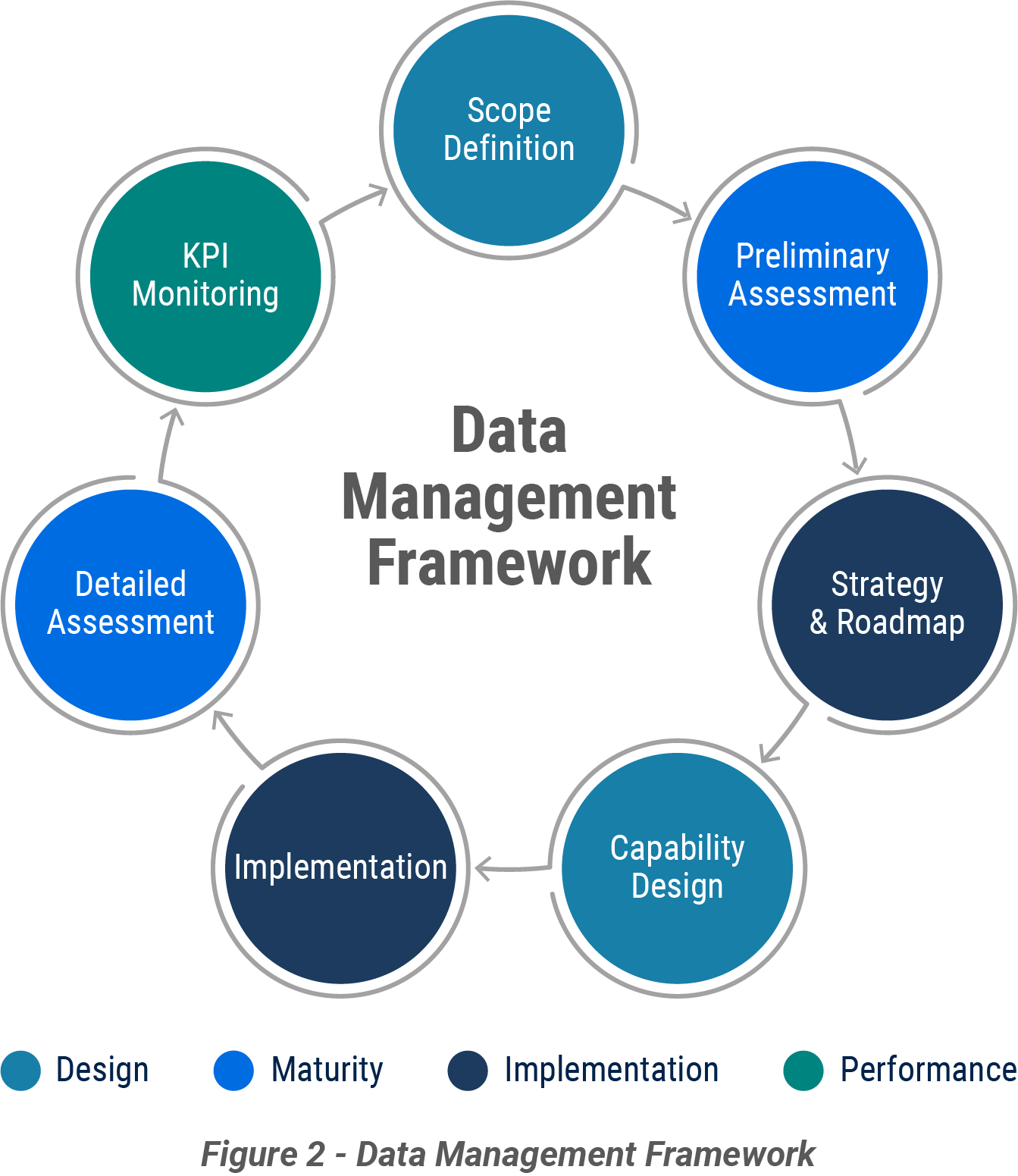

REI Systems recognizes that effective data management requires a tailored approach that addresses each agency’s unique needs and challenges. To that end, we offer a comprehensive data management framework encompassing all aspects of the data lifecycle, from acquisition and storage to analysis and utilization. Our approach is guided by a proven 7-step cyclical process for establishing and sustaining a data management function within an organization.

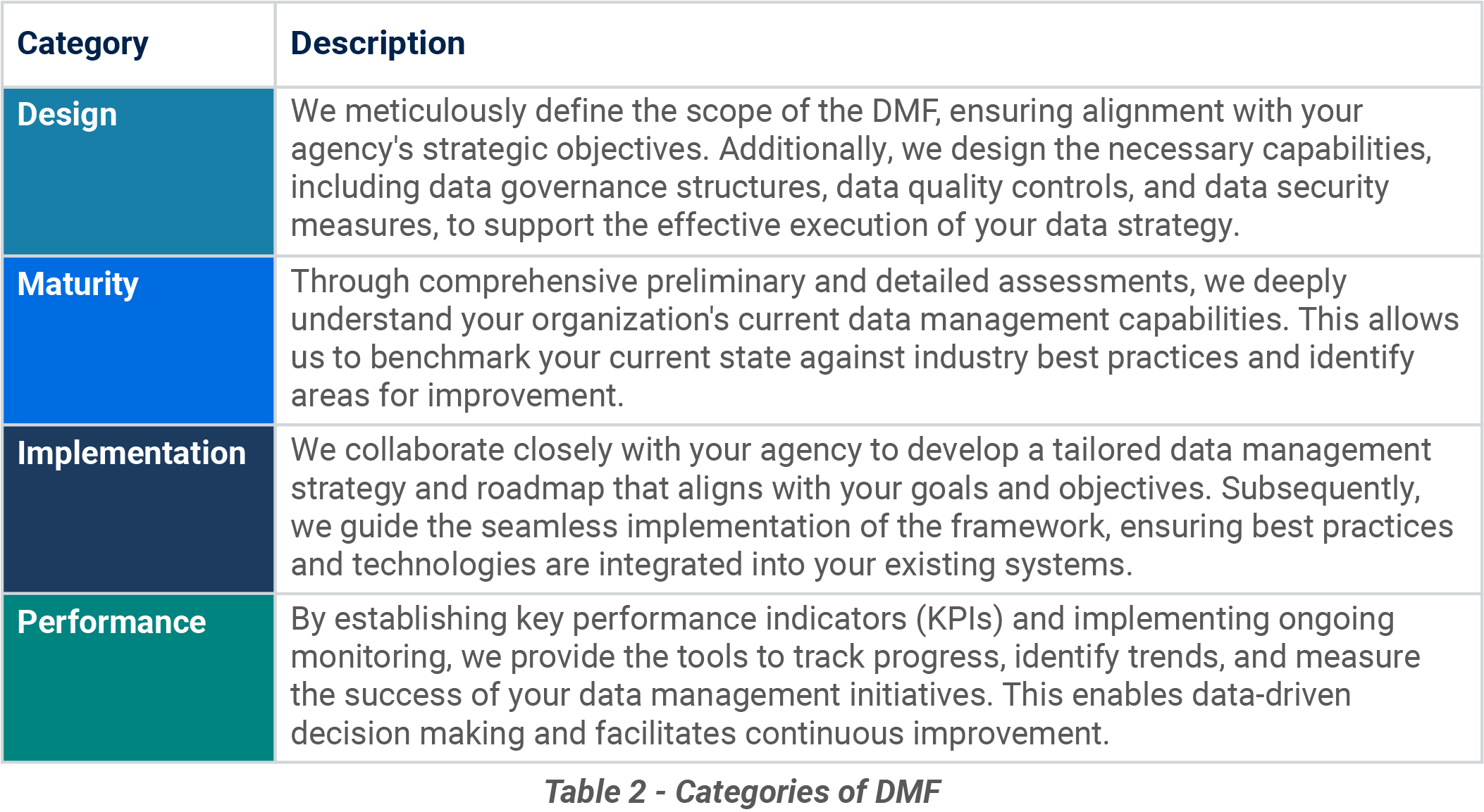

This process, visually represented in Figure 2, is systematically organized into four key categories, as shown in Table 2.

This cyclical process fosters iterative refinement and adaptation, ensuring your data management practices evolve with your agency’s dynamic needs and objectives. Furthermore, by integrating data management maturity assessments at both the preliminary and detailed stages, we provide a structured approach to measure progress and identify opportunities for continuous improvement.

For example, HRSA faced the challenge of integrating data from multiple bureaus and providing tailored data visualizations and decision-making tools. This aligns with the ‘Design’ category of the DMF, where the scope is defined, and capabilities like data governance and integration are designed. REI Systems’ solution involved creating a central data warehouse and bureau-specific data marts, addressing the need for data integration and accessibility, which falls under the ‘Implementation’ category of the DMF. The development of a metadata framework for governance and the Balanced Scorecards for performance tracking aligns with the ‘Maturity’ and ‘Performance’ categories, respectively. The scorecards, integrating multiple data sources and KPIs, enable HRSA leadership to monitor, analyze, and make data-driven decisions, showcasing the successful outcome of the DMF implementation.

Data Management Strategy

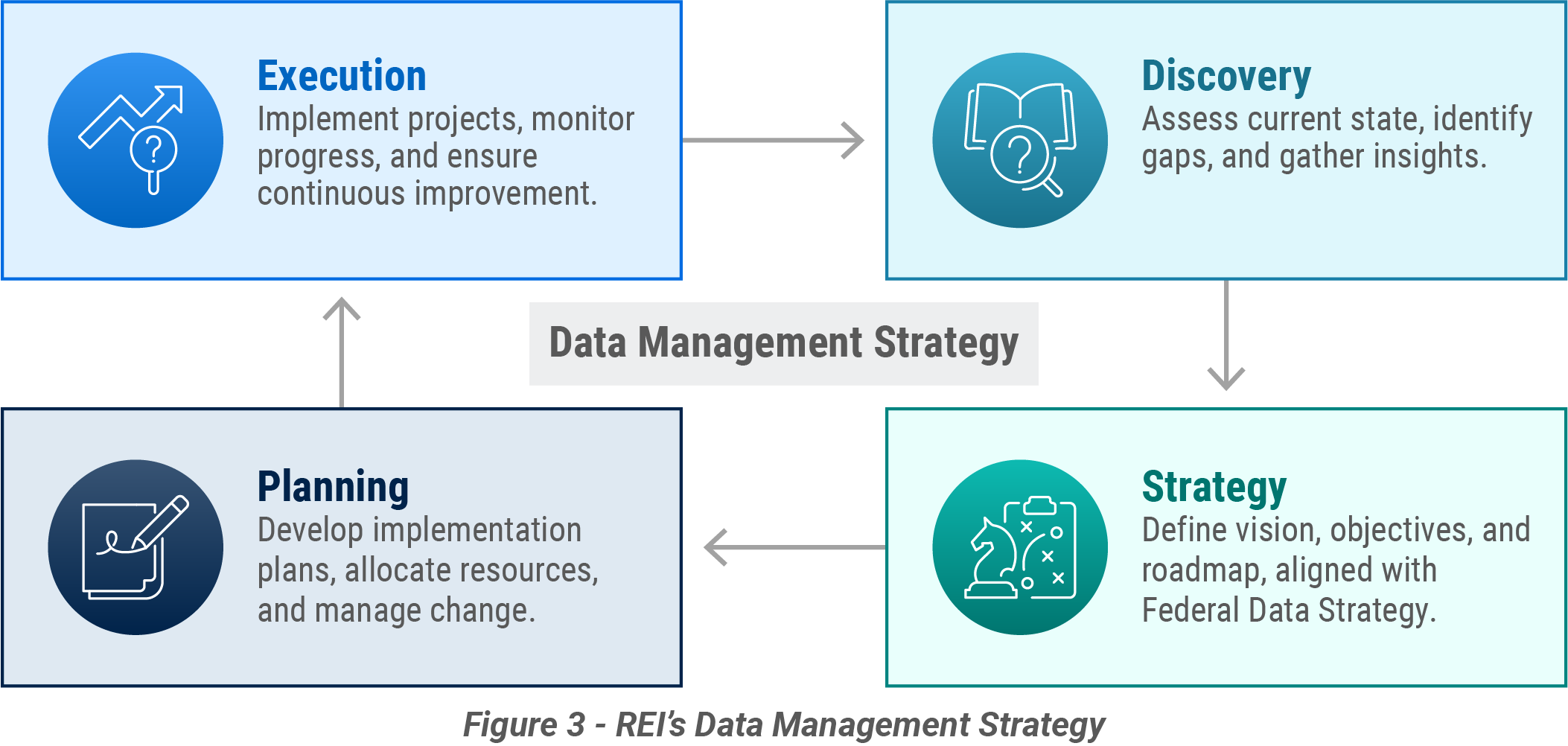

Building upon the robust Data Strategy and Data Governance Framework defined in Part 1 of this white paper series, a well-defined Data Management Strategy (DMS) operationalizes those principles, ensuring effective execution and alignment with the Federal Data Strategy. As visually represented in Figure 3, REI Systems’ approach to crafting a Data Management Strategy involves a collaborative and tailored process that bridges the gap between strategic vision and practical implementation.

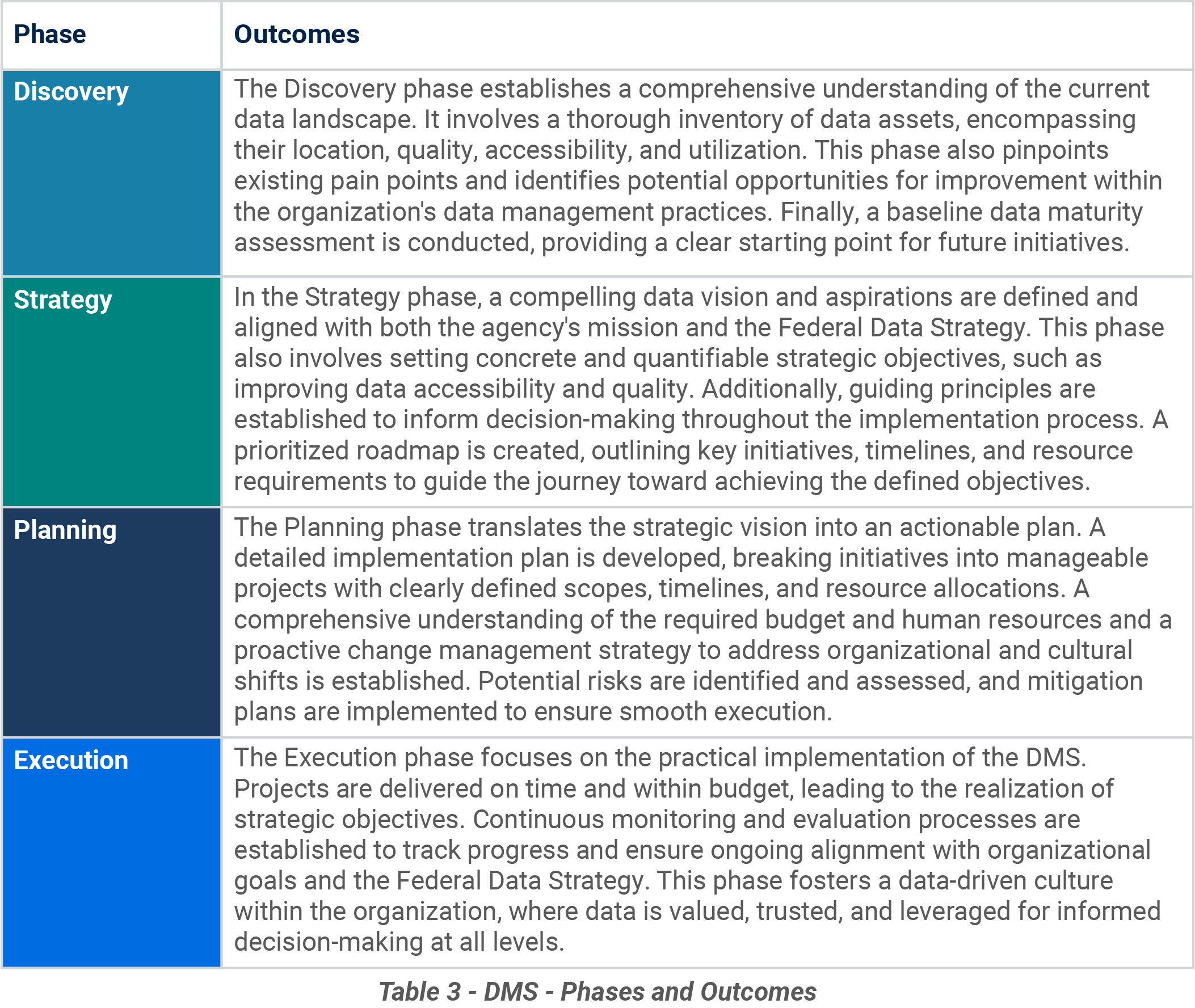

Each phase of the DMS yields distinct outcomes, as laid out in Table 3, building a foundation for data-driven success:

Data Management Maturity Model

While a well-defined Data Management Strategy charts the course, understanding an agency’s current capabilities and plotting a clear path toward improvement is essential for successful execution. The Data Management Maturity Model (DMMM) provides a valuable framework for this assessment and guides agencies on their journey toward data-driven excellence. A DMMM is a multi-level model that evaluates an organization’s data management practices across various dimensions, including data governance, architecture, quality, security, and operations. Each level represents a progressive stage of maturity, with higher levels signifying greater sophistication and effectiveness in data management. The five-level DMMM is similar to the software engineering framework outlined by the Capability Maturity Model Integration (CMMI) Institute:

- Level 1: Ad Hoc: Data management practices are inconsistent, reactive, and lack formal structure. Data is often siloed, with limited visibility and control.

- Level 2: Defined: Basic data management processes are in place, but they may be fragmented and lack standardization. Data quality issues persist, and there is limited integration across systems.

- Level 3: Managed: Data management processes are standardized and integrated. Data quality is actively managed, and there is a focus on data security and compliance.

- Level 4: Optimized: Data management is a core competency focusing on continuous improvement and innovation. Data is treated as a strategic asset, and data-driven insights inform decision-making across the agency.

- Level 5: Transformational: Data management fully integrates into the agency’s culture and operations. Data is leveraged to drive innovation, anticipate future needs, and proactively respond to emerging challenges.

REI will work with the agency stakeholders to choose the best DMMM that addresses their specific data management opportunities and challenges. Our comprehensive assessment process involves in-depth interviews and surveys, documentation review, and technology evaluation. Based on the assessment findings, REI Systems collaborates with the agency to develop a customized roadmap for advancement. This roadmap outlines specific actions, timelines, and resources required to achieve higher levels of data management maturity. We provide ongoing support and guidance throughout the journey, ensuring the agency stays on track and achieves its data management goals. Progressing through the levels of data management maturity yields significant benefits for federal agencies, such as:

Approach to Reach End State

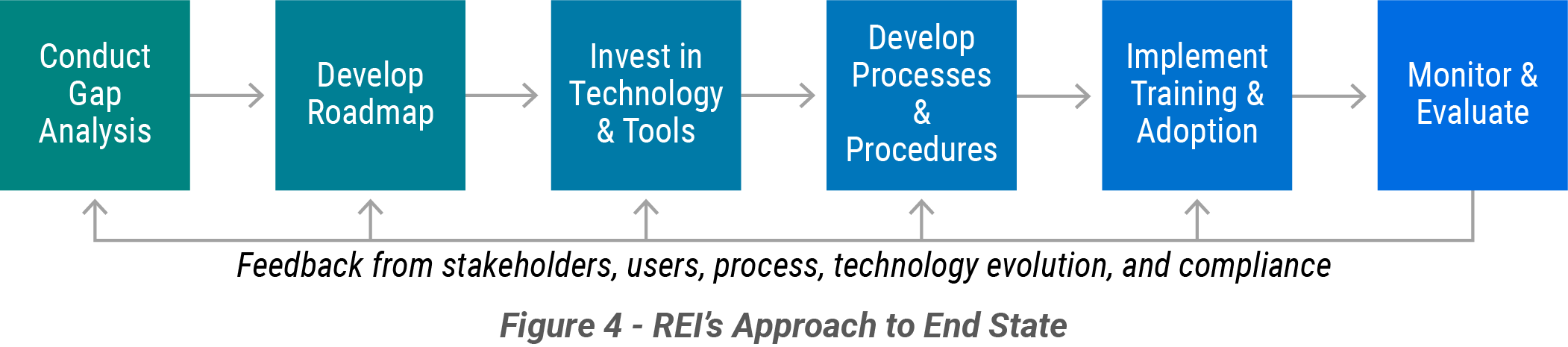

To translate the insights gained from the DMMM assessment into actionable steps and achieve the desired end state of data management maturity, REI Systems follows a structured and collaborative six-step approach, visually outlined in Figure 4.

The process is iterative, incorporating feedback loops to ensure continuous adaptation and improvement.

- Conduct Gap Analysis: To identify areas requiring improvement, a thorough assessment of the current data management capabilities against target maturity levels is conducted.

- Develop Roadmap: A detailed roadmap is created, outlining the specific steps, timelines, and milestones necessary to bridge the identified gaps and reach the target maturity levels.

- Develop Processes & Procedures: Clear and well-defined data management processes and procedures are established and documented to ensure consistent and effective data handling and management across the organization.

- Invest in Technologies & Tools: Strategic investments are made in appropriate data management technologies and tools to support data integration, quality management, security, and analytics.

- Implement Training & Adoption: Comprehensive training and adoption strategies ensure staff understand and can effectively utilize the new tools and processes.

- Monitor & Evaluate: Progress is continuously monitored and evaluated, incorporating feedback from stakeholders, users, and technological advancements to facilitate ongoing improvement and adaptation.
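The first two steps, gap analysis and roadmap development, lend themselves to a small worked example. The sketch below is illustrative only and is not REI Systems' assessment tooling: it assumes each DMMM dimension is scored on the five levels listed earlier, and the `Maturity` enum, `gap_analysis`, `roadmap_order`, and the sample scores are all hypothetical.

```python
from enum import IntEnum

class Maturity(IntEnum):
    # The five DMMM levels, ordered so that levels can be compared and subtracted.
    AD_HOC = 1
    DEFINED = 2
    MANAGED = 3
    OPTIMIZED = 4
    TRANSFORMATIONAL = 5

def gap_analysis(
    current: dict[str, Maturity], target: dict[str, Maturity]
) -> dict[str, int]:
    """Return the number of levels each dimension must climb to reach its target."""
    gaps: dict[str, int] = {}
    for dimension, goal in target.items():
        # Assumption: a dimension that was never assessed is treated as Ad Hoc.
        now = current.get(dimension, Maturity.AD_HOC)
        if goal > now:
            gaps[dimension] = goal - now
    return gaps

def roadmap_order(gaps: dict[str, int]) -> list[str]:
    """Order dimensions by gap size, largest first; ties break alphabetically."""
    return sorted(gaps, key=lambda d: (-gaps[d], d))

# Hypothetical assessment results for one agency.
current = {
    "governance": Maturity.MANAGED,
    "architecture": Maturity.AD_HOC,
    "quality": Maturity.DEFINED,
    "security": Maturity.MANAGED,
}
target = {dimension: Maturity.MANAGED for dimension in current}

gaps = gap_analysis(current, target)
priorities = roadmap_order(gaps)
```

Ranking by gap size is only one possible prioritization rule; a real roadmap would also weigh cost, risk, and mission impact.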

This cyclical and adaptive approach enables agencies to progressively enhance their data management capabilities, ensuring alignment with evolving needs and best practices.

The NASA case study exemplifies REI Systems’ methodical approach to achieving data management maturity. The initial gap analysis pinpointed the need for data consolidation, improved accessibility, and system modernization. This analysis informed the development of a strategic roadmap, which guided the subsequent investment in a comprehensive data warehouse, advanced analytics tools (Tableau), and open-source migration software. The implementation of robust data governance practices further solidified the commitment to data integrity and security. The successful adoption of these new tools and processes involved comprehensive training and change management efforts. The NASA project implemented continuous monitoring and evaluation processes to track progress and ensure alignment with goals, underscoring the iterative nature of the approach and the importance of these metrics for continuous improvement. In essence, the NASA case study showcases how REI Systems’ six-step approach can effectively guide agencies through data management challenges, facilitating progress towards higher levels of data maturity and enabling them to fully leverage their data assets.

Enhancements for AI Support: Building a Solid Data Foundation

Artificial Intelligence (AI) and Machine Learning (ML) hold immense potential for transforming government operations, enabling agencies to gain deeper insights, automate processes, and make more informed decisions. However, the success of AI initiatives is inextricably linked to the quality and accessibility of the underlying data. A robust data management foundation is crucial for unlocking the full potential of AI and ensuring its effectiveness within the federal government context.

Data Preparation for AI: The Cornerstone of Success

Data preparation is the critical first step in any AI implementation. It involves transforming raw data into a usable format that AI algorithms can effectively process and learn from. Key components of data preparation for AI include:

- Data Cleansing: Identifying and rectifying errors, inconsistencies, and missing data values to ensure accuracy and reliability. AI models are highly sensitive to data quality; even minor errors can significantly impact their performance.

- Data Integration: Combining data from disparate sources into a unified and consistent format that AI algorithms can readily understand. This process often involves resolving inconsistencies in data formats, structures, and semantics.

- Data Quality Assurance: Implementing ongoing processes to monitor and maintain data quality throughout the AI lifecycle. This includes establishing data quality metrics, implementing validation rules, and conducting regular audits.
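The three components above can be illustrated with a short, standard-library-only sketch. It is a simplified example under stated assumptions rather than a production pipeline: the grant and recipient records, the field names, and the 0.9 completeness threshold are all hypothetical.

```python
def cleanse(rows: list[dict]) -> list[dict]:
    """Data cleansing: trim identifiers, parse amounts, drop unusable rows."""
    cleaned = []
    for row in rows:
        recipient_id = str(row.get("recipient_id", "")).strip()
        try:
            amount = float(str(row.get("amount", "")).replace(",", ""))
        except ValueError:
            continue  # missing or malformed amount
        if recipient_id:
            cleaned.append({"recipient_id": recipient_id, "amount": amount})
    return cleaned

def integrate(grants: list[dict], recipients: list[dict]) -> list[dict]:
    """Data integration: attach recipient names to grants (a left join on the id)."""
    names = {r["id"].strip(): r["name"] for r in recipients}
    return [{**g, "recipient_name": names.get(g["recipient_id"])} for g in grants]

def completeness(rows: list[dict], field: str) -> float:
    """Data quality metric: share of rows with a non-empty value for `field`."""
    if not rows:
        return 1.0
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)

# Hypothetical inputs from two separate source systems.
raw_grants = [
    {"recipient_id": " R1 ", "amount": "1,200.50"},
    {"recipient_id": "R2", "amount": ""},  # missing amount, dropped by cleanse
    {"recipient_id": "R3", "amount": "300"},
]
recipients = [
    {"id": "R1", "name": "County A"},
    {"id": "R2", "name": "County B"},
]

prepared = integrate(cleanse(raw_grants), recipients)
score = completeness(prepared, "recipient_name")

# Validation rule: flag the dataset when completeness falls below the assumed threshold.
THRESHOLD = 0.9
passes_quality_gate = score >= THRESHOLD
```

In this example the grant for `R3` has no matching recipient record, so the completeness metric falls below the threshold and the dataset would be flagged for review before it reaches a model.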

REI Systems’ AI Expertise: Empowering AI-Driven Transformation

REI Systems works with agencies to:

- Assess data readiness: Evaluate existing data assets’ quality, completeness, and suitability for AI applications.

- Develop data preparation pipelines: Design and implement automated pipelines to cleanse, integrate, and transform data into AI-ready formats.

- Implement data quality controls: Establish robust data quality assurance processes to maintain data integrity and accuracy throughout the AI lifecycle.

- Integrate AI into workflows: Seamlessly incorporate AI capabilities into existing data management workflows to enable intelligent automation, predictive analytics, and decision support.

Conclusion

Effective data management is the backbone of modern government operations, enabling data-driven decision making, improving service delivery, and ultimately enhancing mission outcomes. By adopting modern data management practices, technologies, and tools, agencies can unlock the full potential of their data assets, driving innovation and achieving operational excellence. As agencies prepare to embrace AI and other advanced technologies, a robust data architecture and stringent data quality controls are imperative. These foundational elements ensure agencies can effectively leverage AI to drive innovation, optimize efficiency, and meet mission needs. The importance of data management will only continue to grow as we navigate the future of government operations. It lays the groundwork for successful AI and machine learning integration, a topic we’ll explore in Part 3 of this series.

REI Systems stands ready to partner with agencies on their data management journey, providing expertise and solutions to help them thrive. By investing in data management today, agencies can position themselves for success, unlocking greater efficiencies, improving citizen services, and informing data-driven policy decisions that will shape the future of our nation.

About the Author

Ramakrishnan (Ramki) Krishnamurthy, Data Analytics Lead, REI Systems

Mr. Krishnamurthy is an accomplished technology leader with 25+ years of experience defining and executing transformative data strategies for government organizations, including Fannie Mae, HRSA, and FEMA.

Contact him at: rkrishnamurthy@reisystems.com

Copyright © 2024 REI Systems. All rights reserved.
